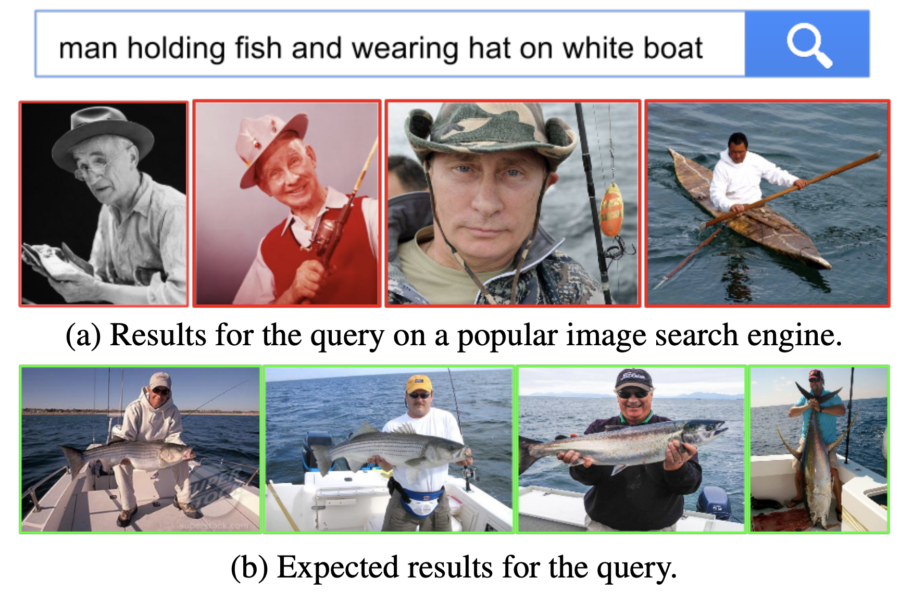

Image search using a complex query like “man holding fish and wearing hat on white boat” returns unsatisfactory results in (a). Ideal results (b) include correct objects (“man”, “boat”), attributes (“boat is white”) and relationships (“man on boat”).

Authors

Justin Johnson, Ranjay Krishna, Michael Stark, Li-Jia Li, David Ayman Shamma, Michael Bernstein, Li Fei-Fei

Abstract

This paper develops a novel framework for semantic image retrieval based on the notion of a scene graph. Our scene graphs represent objects (“man”, “boat”), attributes of objects (“boat is white”) and relationships between objects (“man standing on boat”). We use these scene graphs as queries to retrieve semantically related images. To this end, we design a conditional random field model that reasons about possible groundings of scene graphs to test images. The likelihoods of these groundings are used as ranking scores for retrieval. We introduce a novel dataset of 5,000 human-generated scene graphs grounded to images and use this dataset to evaluate our method for image retrieval. In particular, we evaluate retrieval using full scene graphs and small scene subgraphs, and show that our method outperforms retrieval methods that use only objects or low-level image features. In addition, we show that our full model can be used to improve object localization compared to baseline methods.

The research was published in IEEE Conference on Computer Vision and Pattern Recognition on 6/15/2015. The research is supported by the Brown Institute Magic Grant for the project Visual Genome.

Access the paper: http://cs.stanford.edu/people/jcjohns/papers/cvpr2015/JohnsonCVPR2015.pdf

To contact the authors, please send a message to ranjaykrishna@cs.stanford.edu