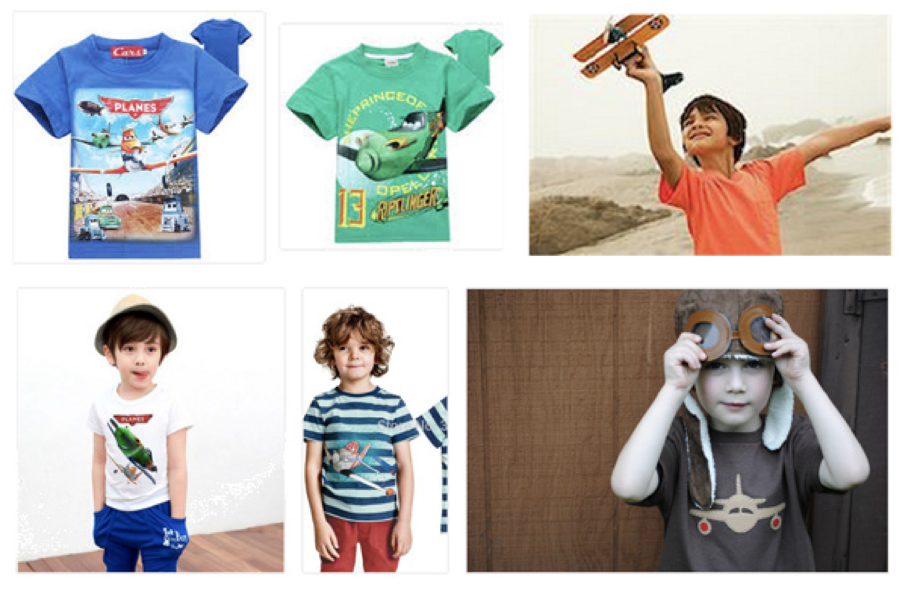

Actual results using a popular image search engine (top row) and ideal results (bottom row) for the query a boy wearing a t-shirt with a plane on it.

Authors

Sebastian Schuster, Ranjay Krishna, Angel Chang, Li Fei-Fei and Christopher D. Manning

Abstract

Semantically complex queries which include attributes of objects and relations

between objects still pose a major challenge to image retrieval systems. Recent work in computer vision has shown that a graph-based semantic representation called a scene graph is an effective representation for very detailed image descriptions and for complex queries for retrieval. In this paper, we show that scene graphs can be effectively created automatically from a natural language scene description. We present a rule-based and a classifierbased scene graph parser whose output can be used for image retrieval. We show that including relations and attributes in the query graph outperforms a model that only considers objects and that using the output of our parsers is almost as effective as using human-constructed scene graphs (Recall@10 of 27.1% vs. 33.4%). Additionally, we demonstrate the general usefulness of parsing to scene graphs by showing that the output can also be used to generate 3D scenes.

The research was published in Empirical Methods in Natural Language Processing – Vision and Language Workshop on 8/17/2016. The research is supported by the Brown Institute Magic Grant for the project Visual Genome.

Access the paper: https://nlp.stanford.edu/pubs/schuster-krishna-chang-feifei-manning-vl15.pdf

To contact the authors, please send a message to ranjaykrishna@cs.stanford.edu