On August 14, 2020, the tech outlet Engadget featured recent research produced under the Magic Grant ‘Synthesizing Novel Video from GANs,’ developed by grantee Haotian Zhang alongside Cristobal Sciutto, under faculty direction from Maneesh Agrawala and Kayvon Fatahalian.

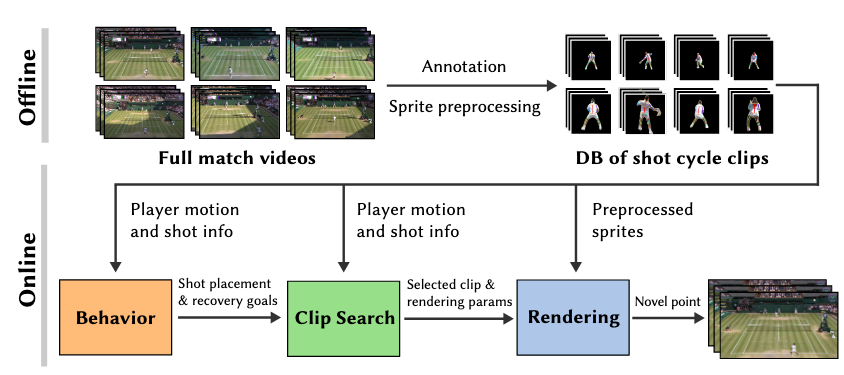

The article, titled “These AI-generated tennis matches are both eerie and impressive,” describes the system developed under the grant, which converts annotated broadcast video of tennis matches into interactively controllable video sprites that behave and appear like professional tennis players.

The team trained their AI using a database of annotated footage. The cyclical nature of tennis helped them create a statistical model that predicts how stars like Novak Djokovic and Roger Federer will play in certain situations… It can even extrapolate how a match may have played out differently had a single shot landed in a different location. What’s more, the system allows you to control a player’s shot placement and recovery position, so there’s the potential a studio could adapt it for gaming.

Research and more information from the project can be found on the Vid2Player site.

Image: System overview. Offline preprocessing prepares a database of annotated shot cycle clips. The annotated clips are used as inputs for modeling player behavior, choosing video sprites that meet behavior goals, and sprite-based rendering.